Top 10 Facts About the History of Computers

The history of computers spans several centuries, from the first mechanical calculating devices to modern-day machines. Each new invention has built on the ones that came before it.

Contents

- 1. Computers

- 2. The First Mechanical Computers

- 3. The Invention of the First Electronic Computer

- 4. The Emergence of Personal Computers

- 5. The First Laptops

- 6. The Rise of Mobile Computing

- 7. The Internet and the World Wide Web

- 8. The Evolution of Gaming

- 9. Artificial Intelligence and Machine Learning

- 10. Future of Computing

- Conclusion

1. Computers

Computers have come a long way since their inception in the 19th century. Today’s computers are faster, more powerful, and more versatile than ever before, and they have transformed nearly every aspect of our lives. From business and education to entertainment and communication, computers are an integral part of modern society.

Computers operate using a combination of hardware and software. The hardware consists of the physical components of the computer, including the processor, memory, storage, and input/output devices. The software is the set of instructions that tells the computer what to do, and it can be divided into two categories: system software and application software.

System software is responsible for managing the computer’s resources and ensuring that all of the hardware components work together. This includes the operating system, device drivers, and utility programs. Application software, on the other hand, is designed to perform specific tasks or functions, such as word processing, web browsing, or gaming.

One of the most significant advancements in computing is the internet. The internet is a vast network of interconnected computers and servers that allows people to communicate and share information with each other from virtually anywhere in the world. This has revolutionized the way we work, learn, and socialize, and it has created new opportunities for businesses and individuals alike.

As computers continue to evolve, we can expect to see even more significant advancements in the years to come. From artificial intelligence and machine learning to quantum computing and virtual reality, the future of computing is full of exciting possibilities that will continue to shape the world around us.

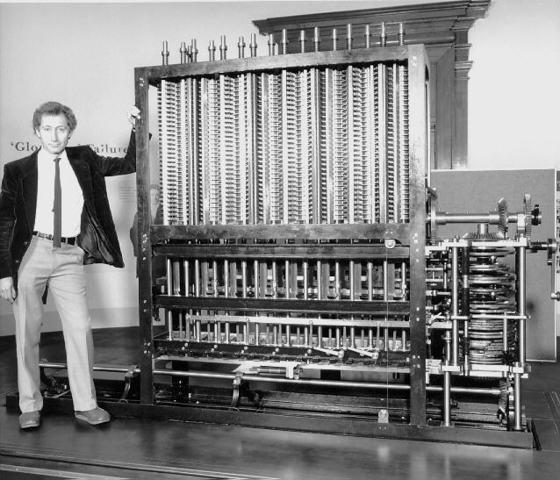

2. The First Mechanical Computers

The first mechanical computers were designed in the 19th century by British mathematician and inventor Charles Babbage. Babbage designed two kinds of machine, which he called the “difference engine” and the “analytical engine.” These machines were meant to perform mathematical calculations using a system of gears and levers, rather than the electronic circuits used in modern computers.

The difference engine was designed to tabulate polynomial functions using the method of finite differences, which needs only repeated addition. The analytical engine was a more ambitious, general-purpose design that was to be programmed with punched cards. Babbage’s designs were groundbreaking for their time and laid the foundation for the development of modern computing.
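The way a difference engine tabulates a polynomial can be sketched in a few lines of Python. This is only an illustration of the method of finite differences, not a model of Babbage’s actual mechanism. The function names `difference_table` and `tabulate` and the sample polynomial x² + x + 41 are assumptions chosen for the example.

```python
def difference_table(values):
    """Return the first entry of each difference column.

    For a polynomial of degree d, d + 1 starting values are enough
    to recover the initial value and all of its differences.
    """
    column = list(values)
    leading = []
    while column:
        leading.append(column[0])
        # Next column: differences between neighbouring entries.
        column = [b - a for a, b in zip(column, column[1:])]
    return leading


def tabulate(initial_differences, count):
    """Produce `count` successive polynomial values using only addition."""
    registers = list(initial_differences)
    results = []
    for _ in range(count):
        results.append(registers[0])
        # Add each higher-order difference into the register below it.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return results


# Hypothetical example: p(x) = x*x + x + 41 at x = 0, 1, 2 gives 41, 43, 47.
start = difference_table([41, 43, 47])
table = tabulate(start, 6)
print(table)
```

Once the starting differences are known, every further value comes from additions alone. That is why the calculation could, in principle, be carried out by gears and wheels.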

Unfortunately, Babbage was never able to build complete versions of his machines, as funding for his projects was limited and he faced numerous technical challenges. However, his work was not forgotten, and his designs inspired later inventors and engineers to continue developing mechanical computing machines.

One of Babbage’s contemporaries, the Swedish inventor Georg Scheutz, worked with his son Edvard to build a working difference engine in the 1850s. The machine was used to calculate and print mathematical tables, such as the logarithmic tables that were essential for navigation and other scientific calculations.

In the years that followed, other inventors and engineers continued to refine and improve upon Babbage’s designs. In the late 19th and early 20th centuries, mechanical computing machines were used extensively in businesses and scientific laboratories for tasks such as accounting and data analysis.

While mechanical computing machines have been replaced by electronic computers, they had a lasting influence on the development of computing. Without early pioneers like Charles Babbage and Georg Scheutz, the modern computing landscape would look very different.

3. The Invention of the First Electronic Computer

The invention of the first general-purpose electronic computer is commonly credited to a team of engineers and scientists led by John W. Mauchly and J. Presper Eckert at the University of Pennsylvania. The machine they developed, the Electronic Numerical Integrator and Computer (ENIAC), was completed in 1945 and publicly unveiled in 1946.

Prior to the development of the ENIAC, computers were primarily mechanical or electro-mechanical in nature, relying on gears, levers, and relays to perform calculations. However, these machines were slow and limited in their capabilities, and there was a growing need for faster and more versatile computing machines.

The ENIAC was designed to solve this problem. It used vacuum tubes to perform calculations electronically, making it far faster than earlier mechanical and electromechanical machines. It was also programmable: operators could set it up for a different task by rearranging cables and switches, which made it more versatile than earlier special-purpose machines.

The ENIAC was a massive machine, filling an entire room and weighing about 30 tons. It was also expensive to build, costing roughly $500,000 at the time (equivalent to over $6 million today). Despite these challenges, the machine was a significant achievement and paved the way for the development of modern electronic computers.

In the years that followed, Mauchly and Eckert went on to develop other important machines, including the UNIVAC I, which was delivered in 1951 and was the first commercial computer produced in the United States. These machines made it possible to perform complex calculations and process large amounts of data far more quickly than before.

Today, electronic computers are an essential part of modern society, used in nearly every industry and aspect of daily life. From personal computers and smartphones to supercomputers and data centers, the legacy of the ENIAC lives on in the many electronic computing machines that have followed in its footsteps.

4. The Emergence of Personal Computers

The emergence of personal computers (PCs) in the 1970s and 1980s marked a significant shift in the computing landscape. Prior to the development of the PC, computers were large, expensive, and primarily used by businesses and government agencies.

The first commercially successful PC was the Altair 8800, developed by MITS (Micro Instrumentation and Telemetry Systems) in 1975. The Altair was a kit that users could assemble themselves, and it was designed primarily for hobbyists and electronics enthusiasts.

However, it was the release of the Apple II in 1977 that marked the true emergence of the personal computer. The Apple II was one of the first successful mass-produced PCs, and it was designed to be accessible and user-friendly. It came ready to use, with a built-in keyboard and color graphics, which made it a more versatile and functional machine than the hobbyist kits that preceded it.

The success of the Apple II inspired other companies to enter the market, including IBM, which released the IBM PC in 1981. The IBM PC was a more business-oriented machine. It was built largely from off-the-shelf components with an open architecture, which allowed other manufacturers to produce compatible machines.

The emergence of personal computers had a profound impact on society, democratizing access to computing power and giving individuals and small businesses the ability to perform complex calculations and process data. It also paved the way for the widespread adoption of the internet and other digital technologies that have transformed nearly every aspect of modern life.

Today, personal computers continue to evolve and improve, with faster processors, better graphics, and more advanced features. From laptops and desktops to tablets and smartphones, personal computers are an essential part of our daily lives, and they continue to shape the world around us in new and exciting ways.

5. The First Laptops

The first laptops, also known as portable computers or notebook computers, were developed in the 1980s. The concept of a portable computer had been around since the 1970s, but practical laptops only became possible once components had become small and power-efficient enough.

One of the first laptops was the GRiD Compass, released by GRiD Systems Corporation in 1982. It weighed about 5 kg and featured a clamshell design with a built-in display and keyboard.

However, the Grid Compass was expensive and primarily used by the military and government agencies. It wasn’t until the release of the Toshiba T1100 in 1985 that laptops became more affordable and accessible to the general public. The T1100 was one of the first laptops to feature a lightweight design and long battery life, making it ideal for business travelers and students.

Throughout the 1980s and 1990s, laptops continued to evolve and improve, with smaller form factors, faster processors, and better displays. The spread of Wi-Fi from the late 1990s onward made laptops even more versatile, allowing users to connect to the internet and other networks wherever a wireless network was available.

Today, laptops are an essential tool for students, professionals, and anyone who needs to work or access the internet on the go. From lightweight ultrabooks to powerful gaming laptops, there is a wide range of options to choose from, and laptops continue to evolve and improve with advances in technology.

6. The Rise of Mobile Computing

The rise of mobile computing refers to the increasing popularity of mobile devices such as smartphones and tablets, which allow users to access the internet and perform a wide range of tasks on the go.

Mobile computing can be traced back to the development of personal digital assistants (PDAs) in the 1990s, which were small handheld devices that could be used to store and manage information. However, it wasn’t until smartphones became widespread in the 2000s that mobile computing really took off.

Early smartphones from companies like BlackBerry and Nokia were primarily used for email and messaging. However, the release of the iPhone in 2007 revolutionized the mobile computing industry. The iPhone popularized the large multi-touch screen as the main way of interacting with a phone, and it was designed to be easy to use.

The success of the iPhone inspired other companies to enter the market, and today there is a wide range of smartphones available from companies like Samsung and Google. Smartphones are more powerful than ever before, with fast processors, high-quality cameras, and advanced features like facial recognition and augmented reality.

In addition to smartphones, tablets have also become popular mobile computing devices. Tablets are larger than smartphones and offer a more immersive experience, making them ideal for activities like reading, watching videos, and playing games.

The rise of mobile computing has had a profound impact on society, allowing people to stay connected and productive no matter where they are. It has also transformed industries like entertainment, with streaming services like Netflix and Spotify becoming increasingly popular on mobile devices.

As mobile technology continues to evolve, it is likely that mobile computing will become even more central to our daily lives. From wearable devices like smartwatches to the spread of 5G networks, there is a wealth of new technology that will shape the future of mobile computing.

7. The Internet and the World Wide Web

The internet and the World Wide Web (WWW) are two separate but related technologies that have revolutionized the way we communicate, share information, and conduct business.

The internet is a global network of interconnected computers and servers that allows users to exchange information and communicate with each other. It grew out of ARPANET, a network funded by the US Department of Defense’s Advanced Research Projects Agency that carried its first messages in 1969. ARPANET initially linked a handful of research institutions, and networking then spread to universities and other organizations around the world.

The World Wide Web, on the other hand, is a system of interlinked hypertext documents accessed via the internet. It was proposed in 1989 by British computer scientist Tim Berners-Lee, who was working at CERN and looking for a way to share scientific research more easily. The first website went online in 1991, and the WWW quickly became a powerful tool for sharing information and connecting people across the globe.

Today, the internet and the World Wide Web are essential components of modern life. They have revolutionized the way we communicate, allowing us to connect with people around the world via email, social media, and video conferencing. They have also transformed industries like entertainment, with streaming services like Netflix and YouTube becoming increasingly popular.

The internet has also had a profound impact on business, enabling companies to sell products and services to customers around the world and facilitating the rise of e-commerce giants like Amazon and Alibaba.

However, the internet and the World Wide Web have also raised concerns about privacy, security, and the spread of misinformation. Issues like cybercrime, online harassment, and fake news have become increasingly prevalent, highlighting the need for better regulation and safeguards.

Despite these challenges, the internet and the World Wide Web continue to evolve and transform the way we live, work, and communicate. From the growth of cloud computing to the rise of artificial intelligence, there is a wealth of new technology that will shape the future of the internet and the World Wide Web.

8. The Evolution of Gaming

One of the earliest video games, Spacewar!, was developed at MIT in 1962. Since then, gaming has evolved into a multi-billion-dollar industry. Today’s games are complex and realistic, with advanced graphics and artificial intelligence.

9. Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning have changed the way we interact with computers. AI is used in everything from speech recognition to self-driving cars. Machine learning allows computers to learn patterns from data and improve with experience, rather than following rules that a programmer has written out explicitly for each case.
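To make the idea of learning from examples concrete, here is a minimal sketch in Python. The program is never told the rule behind the data. It is only given example inputs and outputs, and it adjusts two numbers until its predictions fit them. The function name `fit_line`, the sample data, and the settings for `learning_rate` and `steps` are assumptions made for this illustration. Real machine learning systems use far larger models and datasets.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b to example points by gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Nudge both parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical training examples that happen to follow y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

The learned values of `w` and `b` end up close to 2 and 1, even though those numbers appear nowhere in the code. They were recovered from the examples.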

10. Future of Computing

The future of computing is exciting and full of possibilities. From quantum computing to brain-computer interfaces, the next generation of computers is likely to be faster, more powerful, and more intuitive. Quantum computing has the potential to solve certain problems that are impractical for classical computers, while brain-computer interfaces could one day allow us to control computers using our thoughts.

Conclusion

The history of computers is a fascinating journey that spans several centuries. From the first mechanical calculators to the powerful smartphones in our pockets today, computers have undergone significant changes and advancements that have transformed the world around us.

In the early days, computers were primarily used for scientific research and military applications. However, as they became smaller, more affordable, and more powerful, they began to revolutionize the way we work, communicate, and conduct business.

One of the most significant developments in computer history was the invention of the microprocessor, which paved the way for the emergence of personal computers and laptops. These devices brought the power of computing into homes and businesses around the world, allowing people to access information and communicate with each other more easily than ever before.

The rise of mobile computing, with the introduction of smartphones and tablets, has taken this even further, giving us the ability to stay connected no matter where we are.

The internet and the World Wide Web have also played a crucial role in the development of computers, transforming the way we access and share information and enabling the rise of e-commerce, social media, and online entertainment.

As computers continue to evolve and advance, we can expect to see even more exciting developments in the future. From artificial intelligence and virtual reality to quantum computing and the Internet of Things, the possibilities are endless.